How to Install Anaconda and TensorFlow 2 with GPU Support in Docker on an Ubuntu 19.04 Host

September 22, 2019

Written by Nick Rubell

Table of Contents

- Overview

- Prerequisites

- Install NVIDIA Container Toolkit

- Prepare the CUDA Docker Image

- Step 1: Make Initial Image Preparations

- Step 2: Download and Install CuDNN

- Step 3: Install Anaconda and Create the Work Environment

- Step 4: Create the environment.yml File (with TensorFlow there)

- Step 5: Apply the environment.yml File to the Work Environment

- Step 6: Create the Test to Verify if GPU is Available.

Overview

NVIDIA Container Toolkit, aka NVIDIA Docker, is supported for LTS versions of Ubuntu only, which today means Ubuntu 18.04. However, NVIDIA Docker still works if it is installed on Ubuntu 19.04. You can follow the official installation instructions and just use the nvidia-container-toolkit package for 18.04.

NVIDIA provides the official CUDA images for various versions. Pick one that is compatible with your TensorFlow version and install it.

Then install Anaconda and TensorFlow with GPU support in the image, following each product's regular installation instructions.

The product versions you want to use may differ from those used in this article. In that case, see the Versions Considerations section below.

The full source code for the project is available on GitHub.

Prerequisites

Before you can install NVIDIA Container Toolkit, you need to install Docker itself and the NVIDIA driver. This article uses CUDA 10.0 and NVIDIA Container Toolkit 1.0.1 (NVIDIA Docker 2.2.0), with the following minimum versions:

- NVIDIA Driver version 410.48, or later (tested with 418)

- Docker, version 19.03, or later (tested with 19.03.1)
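The minimums above lend themselves to a quick automated check. The following is a minimal sketch, not part of the original project: it compares dotted version strings against the driver and Docker minimums listed above. The helper names parse_version and meets_minimum are hypothetical, and reading the installed versions from the host is deliberately left out.

```python
import re

# Minimum versions taken from the prerequisites list in this article.
MIN_DRIVER = (410, 48)
MIN_DOCKER = (19, 3)


def parse_version(text):
    """Turn the leading dotted version in a string (e.g. '19.03.1') into a tuple of ints."""
    match = re.match(r"\d+(?:\.\d+)*", text.strip())
    if match is None:
        raise ValueError("no version number found in {!r}".format(text))
    return tuple(int(part) for part in match.group(0).split("."))


def meets_minimum(found, minimum):
    """Return True when the found version is at least the minimum."""
    return parse_version(found) >= minimum


if __name__ == "__main__":
    # The versions the article reports testing with.
    print("driver ok:", meets_minimum("418", MIN_DRIVER))
    print("docker ok:", meets_minimum("19.03.1", MIN_DOCKER))
```

Comparing tuples of integers rather than raw strings avoids mistakes such as treating "19.3" and "19.03" as different versions.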

Versions Considerations

- Each TensorFlow version requires a specific CUDA version.

- Each CUDA version requires the NVIDIA driver to be at least a certain version.

- CuDNN is built for a specific CUDA version.

- NVIDIA Docker is supported for LTS versions of Ubuntu. However, it will likely also work on non-LTS versions, such as 19.04 in this case.

- This article uses CUDA 10.0 and CuDNN 7.6.3.
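Because CuDNN is built for a specific CUDA version, the Debian package used later in this article carries both versions in its file name. As a rough sketch, assuming that naming pattern holds in general (it is inferred only from the single file name used in this article), a small check could confirm that a downloaded package matches the CUDA version of the base image. The function names parse_cudnn_deb and cudnn_matches_cuda are hypothetical.

```python
import re

# Pattern assumed from the one file name used in this article:
# libcudnn<major>_<cudnn version>-<revision>+cuda<cuda version>_<arch>.deb
CUDNN_DEB = re.compile(
    r"^libcudnn\d+_(?P<cudnn>\d+(?:\.\d+)*)-\d+"
    r"\+cuda(?P<cuda>\d+(?:\.\d+)*)_(?P<arch>\w+)\.deb$"
)


def parse_cudnn_deb(deb_name):
    """Return (cudnn_version, cuda_version) extracted from a package file name."""
    match = CUDNN_DEB.match(deb_name)
    if match is None:
        raise ValueError("unrecognized CuDNN package name: {!r}".format(deb_name))
    return match.group("cudnn"), match.group("cuda")


def cudnn_matches_cuda(deb_name, cuda_version):
    """Return True when the package was built for the given CUDA version."""
    return parse_cudnn_deb(deb_name)[1] == cuda_version


if __name__ == "__main__":
    name = "libcudnn7_7.6.3.30-1+cuda10.0_amd64.deb"
    print(parse_cudnn_deb(name), cudnn_matches_cuda(name, "10.0"))
```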

TensorFlow to CUDA Compatibility Matrix

TensorFlow 2.0 RC uses CUDA 10.0. For more information, see the TensorFlow GPU Support page.

CUDA, CuDNN and NVIDIA Driver Compatibility Matrix

Install NVIDIA Container Toolkit

Follow the steps for Ubuntu 18.04 at NVIDIA Container Toolkit, but set the distribution variable to ubuntu18.04 explicitly instead of detecting it from the host (which would yield ubuntu19.04), as follows:

distribution=ubuntu18.04

curl -s -L https://nvidia.github.io/nvidia-docker/gpgkey | sudo apt-key add -

curl -s -L https://nvidia.github.io/nvidia-docker/$distribution/nvidia-docker.list | sudo tee /etc/apt/sources.list.d/nvidia-docker.list

sudo apt-get update && sudo apt-get install -y nvidia-container-toolkit

sudo systemctl restart docker

Now, if you run Docker with --gpus all, the GPU will be accessible inside the running container.
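Before building anything, it can help to confirm that the toolkit exposes the GPU at all. The sketch below is an illustration rather than part of the original project: it assembles a docker run command that starts the base image used later in this article and runs nvidia-smi in it. It assumes that nvidia-smi is made available inside the container by the toolkit; the function name gpu_smoke_test_command is hypothetical.

```python
import shlex

# Base image used later in this article.
BASE_IMAGE = "nvidia/cuda:10.0-runtime-ubuntu18.04"


def gpu_smoke_test_command(image=BASE_IMAGE):
    """Return the argv list for a throwaway container that runs nvidia-smi."""
    # --rm removes the container on exit; --gpus all exposes every GPU to it.
    return ["docker", "run", "--rm", "--gpus", "all", image, "nvidia-smi"]


if __name__ == "__main__":
    # Print the command instead of executing it, so it can be reviewed first.
    print(" ".join(shlex.quote(arg) for arg in gpu_smoke_test_command()))
```

The returned list can be passed to subprocess.run on a host where Docker and the toolkit are installed.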

Prepare the CUDA Docker Image

We are going to use nvidia/cuda:10.0-runtime-ubuntu18.04 as the base.

Create the project root directory. You will put all the necessary files there.

In this directory, create a file named Dockerfile.

Add the following content there to use the official CUDA image as the base one.

FROM nvidia/cuda:10.0-runtime-ubuntu18.04

Step 1: Make Initial Image Preparations

- Specify where Anaconda is going to be installed.

- Name the work Conda environment.

- Set bash as the default shell for the image creation session.

We will store Conda in /opt/anaconda3 and name the environment main.

ENV CONDA_PATH=/opt/anaconda3

ENV ENVIRONMENT_NAME=main

SHELL ["/bin/bash", "-c"]

Step 2: Download and Install CuDNN

On the development computer:

- Download CuDNN from the official download page.

NVIDIA membership is required. Choose cuDNN v7.6.3 (Aug 23, 2019) for CUDA 10.0, and then cuDNN Runtime Library for Ubuntu18.04 (Deb)

- Copy the downloaded deb file to ./aux in the root directory.

In the Dockerfile:

- Copy the downloaded file from ./aux folder to the image.

- Install the package.

COPY ./aux/libcudnn7_7.6.3.30-1+cuda10.0_amd64.deb /tmp

RUN dpkg -i /tmp/libcudnn7_7.6.3.30-1+cuda10.0_amd64.deb

Step 3: Install Anaconda and Create the Work Environment

In the Dockerfile:

- Install the curl package. It is required to download Anaconda.

- Download and install Anaconda.

- Initialize Conda bash shell integration

- Upgrade Conda with latest packages.

- Create the work environment.

# curl is required to download Anaconda.

RUN apt-get update && apt-get install curl -y

# Download and install Anaconda.

RUN cd /tmp && curl -O https://repo.anaconda.com/archive/Anaconda3-2019.07-Linux-x86_64.sh

RUN chmod +x /tmp/Anaconda3-2019.07-Linux-x86_64.sh

RUN mkdir /root/.conda

RUN bash -c "/tmp/Anaconda3-2019.07-Linux-x86_64.sh -b -p ${CONDA_PATH}"

# Initializes Conda for bash shell interaction.

RUN ${CONDA_PATH}/bin/conda init bash

# Upgrade Conda to the latest version

RUN ${CONDA_PATH}/bin/conda update -n base -c defaults conda -y

# Create the work environment and setup its activation on start.

RUN ${CONDA_PATH}/bin/conda create --name ${ENVIRONMENT_NAME} -y

RUN echo conda activate ${ENVIRONMENT_NAME} >> /root/.bashrc

Step 4: Create the environment.yml File (with TensorFlow there)

In the root directory, create an environment.yml file with the following content.

dependencies:
  - pip
  - pip:
    - tensorflow-gpu

Step 5: Apply the environment.yml File to the Work Environment

In the Dockerfile:

- Copy environment.yml to the image.

- Activate the work environment and update it with packages from environment.yml.

COPY ./environment.yml /tmp/

RUN . ${CONDA_PATH}/bin/activate ${ENVIRONMENT_NAME} \
    && conda env update --file /tmp/environment.yml --prune

Step 6: Create the Test to Verify if GPU is Available.

By this point, the Dockerfile is prepared. Now, let’s create a test that can be run inside the container to validate the installation.

On the development computer:

Create a test-gpu.py file in the root folder with the following content.

import tensorflow as tf

print("GPU Available:", tf.test.is_gpu_available())

In the Dockerfile:

- Copy the test file to the image.

COPY ./test-gpu.py /root

Build and Test the Image

Now let’s test the image. Two options are presented below: the first tests it manually via the Docker CLI, and the second uses Visual Studio Code with the Remote - Containers extension.

NOTE: Before following the steps below, make sure you downloaded CuDNN and copied it to the aux directory as described above.
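Whichever option you choose, the image is built from the same Dockerfile. As a scripted alternative to the interactive session in Option 1, the sketch below assembles a build command and a non-interactive test command. It is an illustration under stated assumptions, not part of the original project: the interpreter path is derived from the CONDA_PATH and ENVIRONMENT_NAME values set in Step 1, and the function names build_command and run_test_command are hypothetical.

```python
import shlex

# Values taken from the article: image tag, Conda path, and environment name.
IMAGE = "anaconda-tensorflow2-gpu:latest"
CONDA_PATH = "/opt/anaconda3"
ENVIRONMENT_NAME = "main"


def build_command(context=".", image=IMAGE):
    """Return the argv list for building the image from the given context."""
    return ["docker", "build", context, "-t", image]


def run_test_command(image=IMAGE):
    """Return the argv list for running test-gpu.py without an interactive shell."""
    # The environment's interpreter is called by its full path because the
    # 'conda activate' line in /root/.bashrc only applies to interactive shells.
    python = "{}/envs/{}/bin/python".format(CONDA_PATH, ENVIRONMENT_NAME)
    return ["docker", "run", "--rm", "--gpus", "all", image,
            python, "/root/test-gpu.py"]


if __name__ == "__main__":
    # Print the commands for review instead of executing them.
    for command in (build_command(), run_test_command()):
        print(" ".join(shlex.quote(arg) for arg in command))
```

Each list can be passed to subprocess.run with check=True, so that a failed build stops the script before the test runs.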

Option 1: Manually via the Docker CLI

- Build the image:

docker build . -t anaconda-tensorflow2-gpu:latest

- Run it:

docker run --gpus all -it anaconda-tensorflow2-gpu:latest

- Inside the created container, run the following command:

python /root/test-gpu.py

If everything is good, you should see the following output:

GPU Available: True

Option 2: Via Visual Studio Code (VSCode) Using the Remote - Containers Extension

Microsoft has developed an extension for VSCode called Remote - Containers. It lets you create development environments and use them inside Docker containers.

For more information, see Developing inside a Container.

The steps in brief:

- Add a ./.vscode/settings.json file with the following content.

{
    "python.pythonPath": "/opt/anaconda3/envs/main/bin/python"
}

While this step is optional, it lets you execute Python scripts without having to specify the interpreter. You can also add other VSCode settings here if you like.

- Install the “Remote - Containers” extension for Visual Studio Code.

- Create a .devcontainer.json file with the following content.

// See https://aka.ms/vscode-remote/devcontainer.json for the devcontainer.json format details or

// https://aka.ms/vscode-dev-containers/definitions for sample configurations.

{

"dockerFile": "Dockerfile",

"name": "anaconda-tensorflow2-gpu",

"extensions": [

"ms-python.python"

],

"runArgs": [

"--gpus=all"

],

"settings": {}

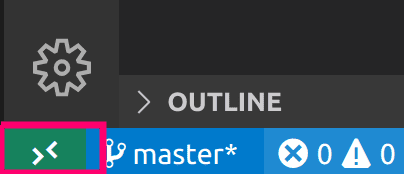

}- Open the Remote - Container extension dialog.

Click the green rectangle at the bottom left corner.

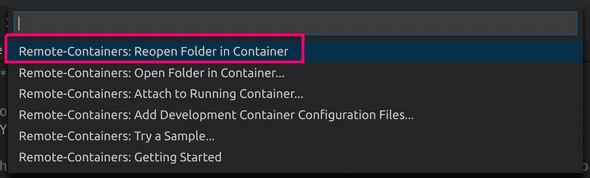

- Choose “Reopen Folder in Container” in the opened menu.

VSCode will build the image and launch a new development container. You will be able to run and debug your code inside the container using VSCode.

The next time you open the folder in VSCode, repeat steps 4 and 5, or confirm when prompted to reopen the folder in the container.

- Execute the test-gpu.py script as usual.

If everything is good, you should see the following output:

GPU Available: True
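If the check prints False, or you want more detail than a single boolean, a variant of test-gpu.py could list the GPU devices TensorFlow sees. The following is a minimal sketch, not the article's test file: it assumes tf.config.experimental.list_physical_devices is available in the installed TensorFlow version, and the function names summarize_gpus and detect_gpus are hypothetical.

```python
def summarize_gpus(device_names):
    """Format a one-line report from a list of GPU device names."""
    if not device_names:
        return "GPU Available: False"
    return "GPU Available: True ({}): {}".format(
        len(device_names), ", ".join(device_names))


def detect_gpus():
    """Return the GPU device names TensorFlow sees, or None if TensorFlow is missing."""
    try:
        import tensorflow as tf
    except ImportError:
        return None
    devices = tf.config.experimental.list_physical_devices("GPU")
    return [device.name for device in devices]


if __name__ == "__main__":
    names = detect_gpus()
    if names is None:
        print("TensorFlow is not installed in this environment")
    else:
        print(summarize_gpus(names))
```

Keeping the formatting separate from the detection lets the report logic be exercised on a machine without a GPU or without TensorFlow.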